UPDATE: this has been resolved and the fixed grammar is in the repo linked in this message.

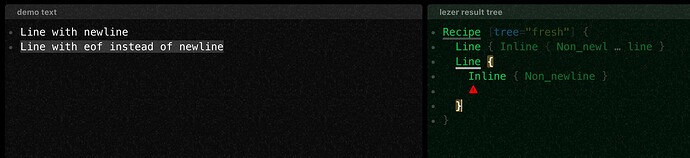

I’ve created a line-oriented grammar for a project I’m working on, where each line is a separate statement in the language. I have it all working except for the last line, which may not end with a newline ("\n") because the input hits the end of file first. For example:

"foo baz\n" works, but "foo baz" doesn’t, because there is no final "\n".

I’ve looked at the Python grammar with its external tokenizer in tokens.js, but when I try to create my own version of that for my grammar, the parser doesn’t work (and sometimes goes into an infinite loop and crashes the browser, depending on how I set the fallback option). I suspect I’m either not advancing the token stream or I’ve configured the tokenizer incorrectly.

To help debug the problem, I have created a GitHub repo with two simple line-oriented grammars: one that matches only newlines, and one that uses an external tokenizer to also match the end of file. The repo is here and can be run via npm install && npm test:

The two example grammars are:

    @top NewLineExample { line+ }

    line { Foo | emptyLine }

    emptyLine { whitespace* newLine }

    newLine { "\n" }

    Foo { "foo" whitespace Var whitespace? newLine }

    Var { identifier }

    @tokens {
      singlespace { " " | "\t" }
      whitespace { singlespace+ }
      identifier { std.asciiLetter+ }
    }

and

    @top NewlineAndEOFExample { line+ }

    line { Foo | emptyLine }

    emptyLine { whitespace* newLineOrEOF }

    newLineOrEOF { newline | eof }

    Foo { "foo" whitespace Var whitespace? newLineOrEOF }

    Var { identifier }

    @tokens {
      singlespace { " " | "\t" }
      whitespace { singlespace+ }
      identifier { std.asciiLetter+ }
    }

    @external tokens newlines from "./newline-or-eof-example-tokens.js" { newline, eof }

with the external tokenizer file (newline-or-eof-example-tokens.js) like this:

    import {ExternalTokenizer} from "lezer"
    import {
      newline as newlineToken, eof
    } from "./newline-or-eof-example.terms.js"

    // Character codes for "\n" and "\r"
    const newline = 10, carriageReturn = 13

    export const newlines = new ExternalTokenizer((input, token, stack) => {
      let next = input.get(token.start)
      if (next < 0) {
        // A negative code is meant to signal the end of the input:
        // emit a zero-length eof token
        token.accept(eof, token.start)
      } else if (next === newline || next === carriageReturn) {
        // Consume a single line-break character
        token.accept(newlineToken, token.start + 1)
      }
    }, {contextual: true, fallback: false})
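
To check the branching logic separately from the parser, the decision this tokenizer makes can be modeled as a plain function over a string. This is only a sketch: classifyAt and its return shape are made up for illustration and are not part of the Lezer API, and a negative character code stands in for what input.get is expected to return at the end of the input.

```javascript
// Hypothetical stand-in for the tokenizer callback; not Lezer API.
const NEWLINE = 10, CARRIAGE_RETURN = 13

function classifyAt(text, start) {
  // Mimic the "negative code at end of input" convention the tokenizer relies on.
  const next = start < text.length ? text.charCodeAt(start) : -1
  if (next < 0) {
    return { type: "eof", end: start } // zero-length token
  }
  if (next === NEWLINE || next === CARRIAGE_RETURN) {
    return { type: "newline", end: start + 1 } // consumes one character
  }
  return null // this tokenizer produces nothing here
}

console.log(classifyAt("foo baz\n", 7)) // { type: 'newline', end: 8 }
console.log(classifyAt("foo baz", 7))   // { type: 'eof', end: 7 }
console.log(classifyAt("foo baz", 3))   // null
```

The eof case is the only one that returns a token without advancing (end equals start). That fits my suspicion that the token stream is not being advanced, but the sketch does not show that this is the actual cause.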

The test script looks like this:

    import { parser as newlineParser } from "./newline-example.js"
    import { parser as newlineOrEofParser } from "./newline-or-eof-example.js"

    console.log("works", newlineParser.parse("foo baz\n").toString())
    console.log("works", newlineOrEofParser.parse("foo baz\n").toString())
    console.log("fails", newlineParser.parse("foo baz").toString())
    console.log("fails", newlineOrEofParser.parse("foo baz").toString())

and when run it outputs:

    works NewLineExample(Foo(Var))
    works NewlineAndEOFExample(Foo(Var))
    fails NewLineExample(Foo(Var,⚠))
    fails NewlineAndEOFExample(Foo(Var,⚠))
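
Since the ⚠ marker in the printed tree is what distinguishes the failing cases, the check can be automated rather than read by eye. The sketch below only inspects strings like the ones printed above; hasErrorNode is a hypothetical name, and it assumes ⚠ shows up in the output solely as the error marker.

```javascript
// Hypothetical helper: report whether a printed tree contains the error marker.
function hasErrorNode(treeString) {
  return treeString.includes("\u26A0") // "⚠"
}

console.log(hasErrorNode("NewLineExample(Foo(Var))"))         // false
console.log(hasErrorNode("NewlineAndEOFExample(Foo(Var,⚠))")) // true
```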

I’d appreciate any help with fixing the external tokenizer, or with matching the end of file without an external tokenizer.
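
As a stopgap that sidesteps the question rather than answering it, the input could be normalized before parsing so that the last line always ends in "\n", which the newline-only grammar already handles. The helper below is a hypothetical sketch (ensureTrailingNewline is not in the repo). Its drawback is that the parsed text can be one character longer than the original, so positions at the very end of the tree would no longer line up with the source.

```javascript
// Hypothetical helper, not part of the repo: pad the input so that a
// newline-only grammar can also parse a last line lacking "\n".
function ensureTrailingNewline(source) {
  // Empty input is returned unchanged; whether to pad it is a design choice.
  if (source.length === 0 || source.endsWith("\n")) return source
  return source + "\n"
}

console.log(JSON.stringify(ensureTrailingNewline("foo baz")))   // "foo baz\n"
console.log(JSON.stringify(ensureTrailingNewline("foo baz\n"))) // "foo baz\n"
```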

Working with Lezer and all the updates in CodeMirror 6 has been fantastic. Thanks for all the work!