I have a syntax that skips whitespaces, but I still requires an explicit whitespace every so often.

I find that I needed an ExternalTokenizer with { extend: true } for that. Only I find that when using the external tokenizer, precedence seems to be ignored. I have Plus and Times, where Times requires an (external) whitespace token between the first expression and the "*".

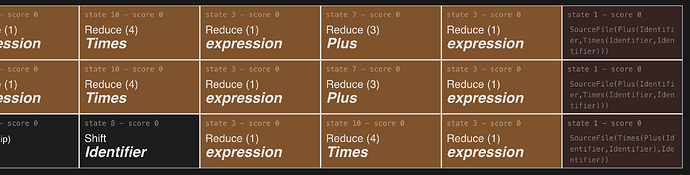

I hope this inspector shows the problem: Lezer Debugger / Michiel Dral | Observable

For the input A * B + C

(This is also a test for whether the inspector even works correctly ![]() )

)

As you can see, the Times(Plus(...)) and Plus(Times(...)) both reach the end, and I guess lezer chooses randomly. The grammar needs the "(" expression "*" ")" rule as well, where the "*" after an expression seems to be the essential part. Oddly enough, both (A * B + C*) and A + B * C both have the correct precedence…

Hope this problem makes some kind of sense ![]()

Here is the grammar:

@precedence {

times @left

plus @left

}

@top SourceFile {

expression

}

expression {

Identifier |

Plus |

Times |

MacroExpression

}

Times {

expression

!times

// I could put 'whitespace' before and after this and the

// plus operator, but this whitespace is the only one necessary

// for the effect

whitespace

"*"

expression

}

Plus {

expression

!plus

"+"

expression

}

MacroExpression {

// Necessary parts here are the opening "("

// and the 'expression "*"' without 'whitespace' in between them.

"(" expression "*" ")"

}

@skip { " " }

@external tokens layout from "./index.tokens.js" {

whitespace

}

@tokens {

Identifier { $[A-Z]+ }

"::" "()"

}

with ExternalTokenizer

import { ExternalTokenizer } from "@lezer/lr";

import * as terms from "./index.terms.js";

const CHAR_SPACE = " ".codePointAt(0);

export const layout = new ExternalTokenizer(

(input, stack) => {

if (

(input.peek(-1) === CHAR_SPACE || input.peek(0) === CHAR_SPACE) &&

stack.canShift(terms.whitespace)

) {

input.acceptToken(terms.whitespace, 0);

return;

}

},

{ extend: true }

);